Who Said What? The Real Challenge of Capturing Conversations

If you've ever replayed a recording of a meeting, panel, or conference talk, you've likely faced the same frustration: it's hard to tell who said what.

In a small team meeting, it might be easy enough to remember voices. But scale that up to a 10-person project review, or a noisy tech conference with multiple panelists, and the transcript quickly becomes a jumble.

This isn't just inconvenient — it undermines the value of the recording. Without clear attribution, follow-ups become harder:

- Who raised that crucial objection?

- Which speaker suggested the new timeline?

- Was it the client or the engineer who mentioned a blocker?

When transcripts blur these details, accountability and clarity suffer.

The Pain Point: Losing Context in the Conversation Chaos

In professional settings, accountability depends on knowing who said what. When you record a multi-speaker event, whether an in-person workshop, a noisy industry panel, or a long, complex team sync, missing speaker labels cause immediate problems:

- Lost Accountability: The most critical issue is the inability to assign action items or decisions accurately. You know a commitment was made, but without a clear label, you can't confidently tell whether Sarah (PM) or Mark (Engineer) agreed to take the next step. This confusion leads to dropped balls and stalled projects.

- The Review Nightmare: Transcripts without attribution are simply tedious to review. Instead of quickly scanning the input from key contributors, you are forced to re-read every line, wasting valuable time trying to stitch the conversation's flow and context back together. This often means you miss the subtle dynamics of the conversation—who drove the decision, who voiced a concern, or who interrupted whom.

- The Noisy Environment Problem: High-quality speaker identification is notoriously difficult. Background chatter from a busy conference hall, inconsistent microphone quality, or overlapping speech often defeats even the best AI transcription models. This results in generic labels like "Speaker 1," "Speaker 2," and frustrating segments of text merged under the wrong name.

Ultimately, relying on unlabelled transcripts forces you to sacrifice focus in the meeting and then spend more time later manually cleaning up the messy document. The tool meant to save you time ends up creating another tedious step in your workflow.

Why Speaker Identification Matters

Imagine re-reading the notes from a client workshop where three decision-makers and two consultants spoke. Without speaker separation, the transcript becomes a wall of text. With speaker diarization (automatically working out who spoke when), you instantly know:

- Speaker A (CEO) asked for budget clarification

- Speaker B (PM) outlined next steps

- Speaker C (Engineer) flagged a technical issue

This structure transforms a transcript into something actionable. Instead of searching for quotes or guessing context, you can trust that insights are correctly attributed.

The State of Technology (and Its Limits)

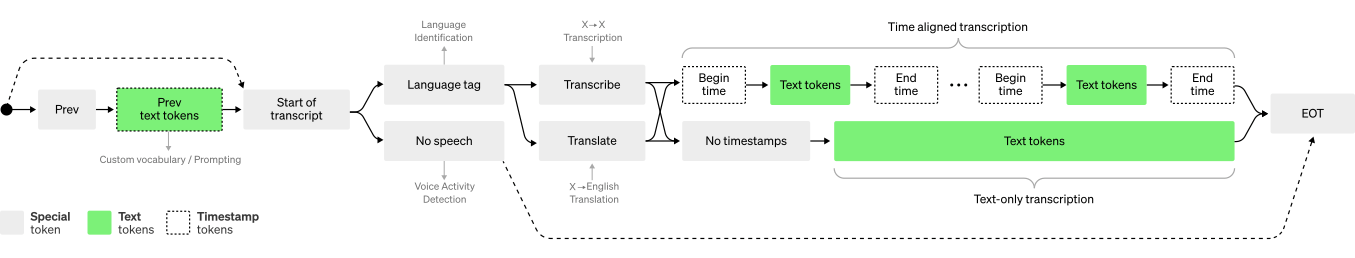

Speaker diarization is not new — research labs and open-source projects have been tackling it for years — but it remains one of the hardest problems in speech AI. Voices overlap, background noise creeps in, and accents add complexity. Even the most advanced models can still mislabel speakers or mistakenly split one person into multiple identities.

Source: OpenAI Whisper, https://openai.com/index/whisper/

A few examples of where diarization shows up today:

- Pyannote – A popular open-source toolkit that’s widely used in research for speaker diarization. It delivers impressive accuracy but requires technical setup and isn’t always practical for everyday users.

- Otter.ai – A consumer-facing note-taking app that offers speaker separation, but performance can vary depending on recording quality and noise levels.

- Zoom’s transcription feature – In enterprise settings, Zoom can attempt to identify speakers during meetings, though accuracy tends to dip in large groups or when multiple people speak at once.

- Whisper + community extensions – OpenAI’s Whisper doesn’t natively support diarization, but developers have built wrappers and integrations (for example, pairing it with Pyannote) to add this capability. Results are promising but still fall short of fully reliable labels.
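
To make the "Whisper plus a diarizer" idea concrete, here is a minimal sketch of one common way such integrations combine the two outputs: each transcript segment gets the speaker whose turns overlap it the most in time. This is an illustration only, not the code of any tool listed above. The `Segment` and `Turn` types, the `assign_speakers` function, and the sample timestamps are all hypothetical, and no real transcription or diarization library is called.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """A transcribed chunk of speech (hypothetical shape)."""
    start: float
    end: float
    text: str


@dataclass
class Turn:
    """A diarization result: one speaker talking in a time span (hypothetical shape)."""
    start: float
    end: float
    speaker: str


def overlap(a_start, a_end, b_start, b_end):
    """Return the number of seconds two time spans share (0.0 if none)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def assign_speakers(segments, turns, unknown="Unknown"):
    """Label each segment with the speaker who overlaps it the most."""
    labeled = []
    for seg in segments:
        totals = {}
        for turn in turns:
            shared = overlap(seg.start, seg.end, turn.start, turn.end)
            if shared > 0:
                totals[turn.speaker] = totals.get(turn.speaker, 0.0) + shared
        # Segments that no turn covers keep a placeholder label.
        speaker = max(totals, key=totals.get) if totals else unknown
        labeled.append((speaker, seg.text))
    return labeled


# Made-up sample data for illustration.
segments = [
    Segment(0.0, 4.0, "Can we clarify the budget?"),
    Segment(4.0, 9.0, "Next steps are on the board."),
    Segment(20.0, 22.0, "(inaudible)"),
]
turns = [
    Turn(0.0, 4.5, "Speaker 1"),
    Turn(4.5, 9.0, "Speaker 2"),
]

labeled = assign_speakers(segments, turns)
for speaker, text in labeled:
    print(f"{speaker}: {text}")
```

The sketch also hints at why results stall at "almost there": when two people talk over each other, a single segment overlaps several turns, and picking the largest overlap necessarily drops one of the voices.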

For everyday users, these solutions can be useful but often feel incomplete. The transcript may give you an idea of speaker turns but still requires heavy editing to be reliable. That gap — between raw transcription and clean, structured, speaker-attributed notes — is what professionals and event-goers are still struggling to bridge.

Where HyNote Fits In

At HyNote, we've heard this pain point again and again, especially from users attending large conferences or running multi-stakeholder meetings. To solve this, a modern note-taking tool must offer sophisticated Speaker Identification (often called diarization)—the ability to automatically detect, segment, and label each distinct voice in the audio recording.

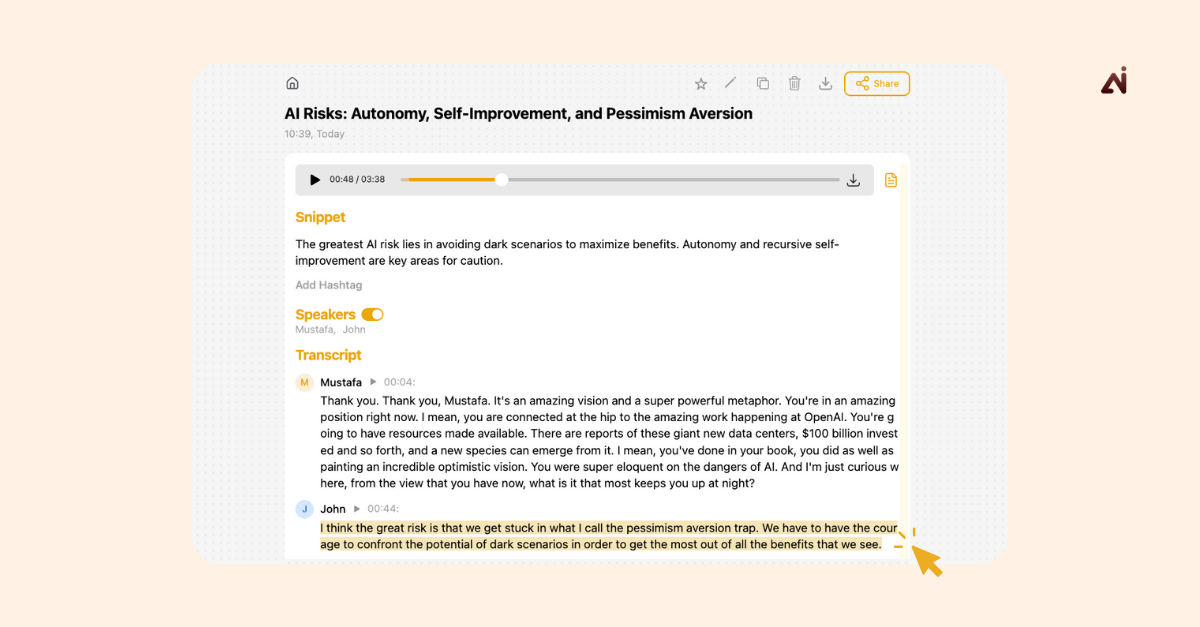

HyNote brings this crucial clarity to your workflow:

- Clear Attribution: HyNote's speaker identification automatically assigns dialogue to individuals, converting a confusing text dump into a structured, readable script.

- Making it Actionable: With clear labels, HyNote's AI can go further, grouping insights and actions by person, making your final notes immediately useful for follow-up and tracking.

- Final Control: AI models aren't always perfect, especially on noisy real-world recordings, so HyNote gives you the final say. You can edit a speaker name afterward, turning the generic "Speaker 1" into "CEO: John".
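
As a rough illustration of what relabeling and per-person grouping involve, the sketch below renames a generic label and then collects each person's lines. It is not HyNote's implementation: the `rename_speakers` and `group_by_speaker` helpers and the sample transcript are hypothetical, chosen only to show the idea.

```python
from collections import defaultdict


def rename_speakers(labeled, names):
    """Replace generic labels using a mapping; unmapped labels are kept as-is."""
    return [(names.get(speaker, speaker), text) for speaker, text in labeled]


def group_by_speaker(labeled):
    """Collect each speaker's lines, preserving the order they were spoken."""
    grouped = defaultdict(list)
    for speaker, text in labeled:
        grouped[speaker].append(text)
    return dict(grouped)


# Made-up transcript for illustration.
transcript = [
    ("Speaker 1", "Can we clarify the budget?"),
    ("Speaker 2", "I'll send the timeline by Friday."),
    ("Speaker 1", "Thanks, that works."),
]

renamed = rename_speakers(transcript, {"Speaker 1": "CEO: John"})
by_person = group_by_speaker(renamed)

for person, lines in by_person.items():
    print(person)
    for line in lines:
        print(f"  - {line}")
```

Because the correction is applied to the label rather than to each line, fixing one name updates every line attributed to that speaker.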

Our Commitment to Continuous Improvement

We are proud to offer advanced speaker identification for your most complex conversations, even in tough environments like noisy conferences and large meetings. We also believe in transparency: HyNote's speaker identification model is still under active development. The technology is powerful, but telling voices apart in high-overlap or low-quality audio remains hard, and our results are not perfect. We keep investing in the model's accuracy so that your notes get cleaner and your post-meeting workload gets lighter with every update.